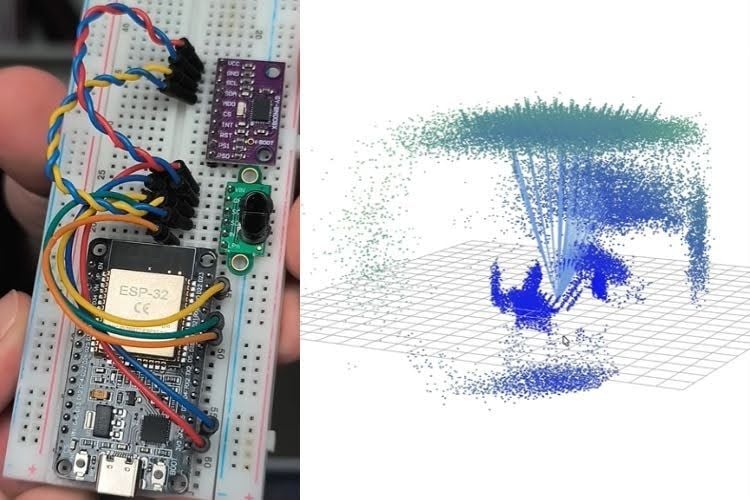

What if a small sensor could understand space in three dimensions? A maker recently showed how a Time-of-Flight (ToF) sensor can be turned into a basic 3D scanner. The sensor emits infrared light and measures how long that light takes to bounce back from a surface. Because light travels at a known speed, the round-trip time gives the distance, which is the principle behind time-of-flight distance measurement. Because the sensor has a multi-zone depth array, it captures a whole grid of distance readings at once rather than a single value, producing a coarse depth image that forms the first layer of depth information.
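The time-of-flight principle reduces to one formula: the light travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light, d = c·t/2. The minimal sketch below applies that formula and then turns a square grid of zone readings into 3D points in the sensor's own frame. The helper names, the 45° field of view, and the assumption that zone centres are evenly spaced in angle are all hypothetical choices for illustration; the project does not say which sensor or geometry it uses.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_distance(round_trip_s: float) -> float:
    """Distance to the target from a light pulse's round-trip time: d = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def zones_to_points(distances, fov_deg=45.0):
    """Convert an N x N grid of zone distances (metres) into (x, y, z) points
    in the sensor frame, with z pointing straight out of the sensor.

    Hypothetical geometry: zone centres are evenly spaced in angle across a
    square field of view, and each reading is the range along that zone's ray.
    A value of None marks a zone with no valid return and is skipped.
    """
    n = len(distances)
    half_fov = math.radians(fov_deg) / 2.0
    step = 2.0 * half_fov / n
    points = []
    for row in range(n):
        for col in range(n):
            d = distances[row][col]
            if d is None:
                continue
            # Angles of this zone's centre relative to the optical axis
            ax = -half_fov + (col + 0.5) * step
            ay = -half_fov + (row + 0.5) * step
            # Normalised direction of the zone's ray, scaled by the measured range
            dx, dy, dz = math.tan(ax), math.tan(ay), 1.0
            norm = math.sqrt(dx * dx + dy * dy + dz * dz)
            points.append((d * dx / norm, d * dy / norm, d * dz / norm))
    return points
```

For example, `tof_distance(10e-9)` gives about 1.5 m. Running the same arithmetic backwards shows that resolving a single millimetre means distinguishing round-trip times only about 6.7 picoseconds apart.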

Depth readings alone are not enough: to make sense of them, the system also has to know how the scanner was moving and where it was pointing when each reading was taken. An Inertial Measurement Unit (IMU), which includes an accelerometer and a gyroscope, records how the scanner is tilted and moved. Through sensor fusion, the system combines this motion data with the depth readings to place each measurement at the correct position in space. The result is a growing point cloud, which is then cleaned up with noise filtering to remove stray readings, so the scanned environment becomes clearer and more accurate.
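The project does not describe its fusion or filtering methods, so the sketch below swaps in two common techniques. A complementary filter integrates the gyroscope for smooth short-term changes in angle and blends in the tilt implied by gravity from the accelerometer to cancel the gyroscope's drift. Each frame of sensor-frame points is then rotated into a world frame and added to the cloud, and a simple voxel-count filter drops points that sit alone in sparsely occupied cells. Assumptions, all hypothetical: the blend weight `ALPHA = 0.98`, a 5 cm voxel with a minimum of 2 points, yaw tracked from the gyroscope alone (so it drifts), body rates treated as Euler-angle rates (a small-angle simplification), and the scanner rotating in place without translation.

```python
import math
from collections import Counter

ALPHA = 0.98  # hypothetical weight: how much to trust the integrated gyro vs the accelerometer


class OrientationEstimator:
    """Complementary filter for roll and pitch; yaw is gyro-only and will drift."""

    def __init__(self):
        self.roll = self.pitch = self.yaw = 0.0  # radians

    def update(self, gyro, accel, dt):
        gx, gy, gz = gyro   # angular rates in rad/s about sensor x, y, z
        ax, ay, az = accel  # any consistent unit, e.g. m/s^2
        # Tilt implied by gravity (only valid while the scanner is not accelerating hard)
        accel_roll = math.atan2(ay, az)
        accel_pitch = math.atan2(-ax, math.hypot(ay, az))
        # Small-angle simplification: body rates are integrated directly as Euler-angle rates
        self.roll = ALPHA * (self.roll + gx * dt) + (1 - ALPHA) * accel_roll
        self.pitch = ALPHA * (self.pitch + gy * dt) + (1 - ALPHA) * accel_pitch
        self.yaw += gz * dt
        return self.roll, self.pitch, self.yaw


def rotate(point, roll, pitch, yaw):
    """Rotate a sensor-frame point into the world frame: R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    x, y, z = point
    cr, sr = math.cos(roll), math.sin(roll)
    y, z = cr * y - sr * z, sr * y + cr * z  # roll about x
    cp, sp = math.cos(pitch), math.sin(pitch)
    x, z = cp * x + sp * z, -sp * x + cp * z  # pitch about y
    cy, sy = math.cos(yaw), math.sin(yaw)
    x, y = cy * x - sy * y, sy * x + cy * y  # yaw about z
    return (x, y, z)


def voxel_filter(points, voxel=0.05, min_count=2):
    """Keep only points whose voxel contains at least min_count points."""

    def key(p):
        return tuple(math.floor(c / voxel) for c in p)

    counts = Counter(key(p) for p in points)
    return [p for p in points if counts[key(p)] >= min_count]


def add_frame(cloud, frame_points, estimator, gyro, accel, dt):
    """Update the orientation estimate and append one frame of rotated points to the cloud."""
    roll, pitch, yaw = estimator.update(gyro, accel, dt)
    cloud.extend(rotate(p, roll, pitch, yaw) for p in frame_points)
    return cloud
```

In use, each new depth frame would go through `add_frame`, and `voxel_filter(cloud)` would be run on the accumulated cloud before display. A complementary filter is chosen here because it needs only a few arithmetic operations per sample, which suits a microcontroller; a Kalman filter is a heavier alternative that can also weight sensor noise explicitly.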

This project shows how simple hardware combined with careful processing can produce impressive results. A microcontroller manages the data flow, and the system performs 3D mapping and spatial reconstruction in real time. While the technology behind it involves concepts like depth sensing and orientation tracking, the idea itself is easy to understand: move the scanner around, collect distance data, and gradually build a 3D view of the surroundings. It’s a great example of applying technical knowledge in an accessible and creative way.