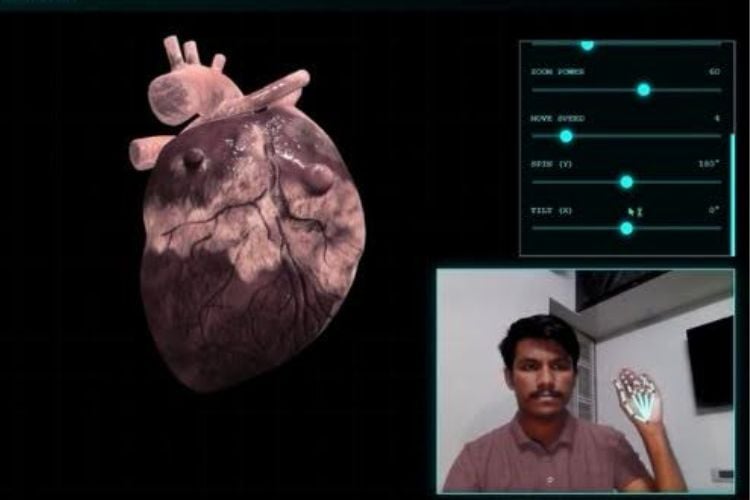

Sudarshan VasanthaKumar, a biomedical engineering student, has developed a vision-based, touchless gesture interface for sterile medical environments. The system lets surgeons rotate, zoom, and inspect 3D medical models without touching a mouse, keyboard, or screen, which matters in operating rooms where sterility is critical.

The project, called Bio-Vision, runs entirely in a web browser and uses a standard webcam for input. It relies on Google's MediaPipe hand tracking, which reports 21 landmark points per hand, to recognize gestures, while a Three.js rendering engine displays anatomical models in real time. Because the system requires no specialized hardware such as depth cameras, VR headsets, or motion controllers, it is accessible and low-cost.
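To illustrate how 21 hand landmarks can drive a 3D view, here is a minimal sketch of one possible gesture: a thumb-to-index pinch mapped to a zoom factor. It is not taken from Bio-Vision, whose gesture logic is not described here. The landmark indices follow MediaPipe's published hand model (0 wrist, 4 thumb tip, 8 index fingertip, 9 middle-finger knuckle); the threshold constants and the function names `pinchRatio` and `zoomFromPinch` are hypothetical.

```typescript
// One tracked point. MediaPipe reports x and y normalized to image width and height.
interface Landmark {
  x: number;
  y: number;
  z: number;
}

// Landmark indices from MediaPipe's 21-point hand model.
const WRIST = 0;
const THUMB_TIP = 4;
const INDEX_TIP = 8;
const MIDDLE_MCP = 9;

// Hypothetical tuning values for illustration, not taken from Bio-Vision.
const PINCH_CLOSED = 0.2; // ratio at or below which the pinch counts as closed
const PINCH_OPEN = 1.2; // ratio at or above which the pinch counts as fully open
const MIN_ZOOM = 0.5;
const MAX_ZOOM = 3.0;

// Fetch one landmark, failing loudly if the index is out of range.
function point(hand: Landmark[], index: number): Landmark {
  const p = hand[index];
  if (p === undefined) {
    throw new Error(`missing landmark ${index}`);
  }
  return p;
}

// Straight-line distance in landmark coordinates. This simplification
// ignores the image aspect ratio.
function distance(a: Landmark, b: Landmark): number {
  return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}

// Thumb-to-index distance divided by palm length, so the value stays
// roughly stable as the hand moves toward or away from the camera.
function pinchRatio(hand: Landmark[]): number {
  if (hand.length !== 21) {
    throw new Error(`expected 21 landmarks, got ${hand.length}`);
  }
  const palm = distance(point(hand, WRIST), point(hand, MIDDLE_MCP));
  if (palm === 0) {
    return 0;
  }
  return distance(point(hand, THUMB_TIP), point(hand, INDEX_TIP)) / palm;
}

// Linearly map the pinch ratio onto a zoom factor clamped to [MIN_ZOOM, MAX_ZOOM].
function zoomFromPinch(ratio: number): number {
  const t = (ratio - PINCH_CLOSED) / (PINCH_OPEN - PINCH_CLOSED);
  const clamped = Math.min(1, Math.max(0, t));
  return MIN_ZOOM + clamped * (MAX_ZOOM - MIN_ZOOM);
}
```

In a Three.js scene, the result could be assigned to `camera.zoom` each frame, followed by `camera.updateProjectionMatrix()`. Dividing by palm length is a design choice that keeps the gesture from changing meaning when the hand is nearer to or farther from the webcam.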

The project's long-term goal is to democratize medical imaging by enabling touch-free interaction on affordable laptops, particularly in resource-limited rural clinics. Current development focuses on gesture-based control of existing 3D models; future versions aim to integrate real-time medical scans, which would extend the system's usefulness in surgical workflows.