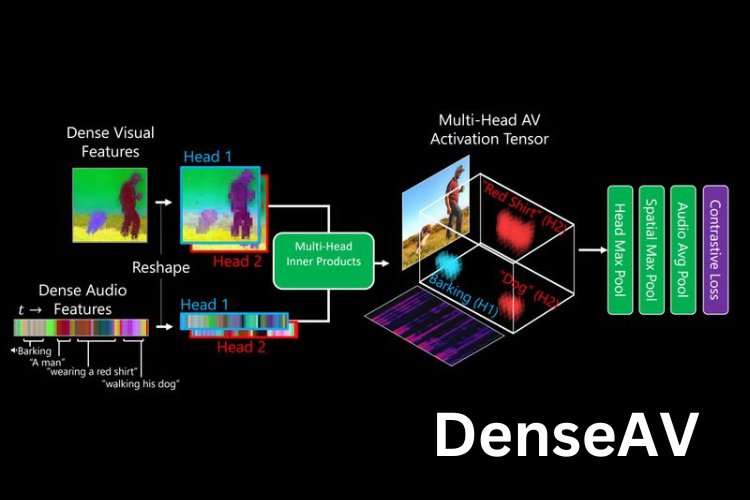

Many projects can recognize language, sounds, images, or objects on their own. Today, we're going to meet DenseAV, which learns to connect several of these at once by linking what it hears with what it sees. Developed by a group of researchers at MIT, DenseAV is technically a novel dual-encoder grounding architecture. It learns high-resolution, semantically meaningful, audio-visually aligned features solely by watching videos.

In the GIF above, you can see DenseAV in action. It associates what it hears with what it sees: when it detects the sound of dogs barking, it highlights the dogs in the video frames. It does the same for speech, recognizing spoken words and highlighting the parts of the frame they refer to. In the GIF, you can see highlights for the words "puppies" and "snow." More examples are available on the official DenseAV website.
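To make the "highlighting" idea concrete, here is a minimal NumPy sketch of how a dual-encoder model can turn features into a heatmap. It assumes, hypothetically, that a visual encoder has already produced one feature vector per pixel (shape `H×W×C`) and an audio encoder one feature vector per audio time step (shape `T×C`); random arrays stand in for both encoders, and the helper name `grounding_heatmap` is illustrative, not part of DenseAV's code. Each pixel is scored by its average cosine similarity to the audio features, which shows the general principle of dense audio-visual grounding rather than DenseAV's exact implementation.

```python
import numpy as np

def l2_normalize(x: np.ndarray, axis: int = -1, eps: float = 1e-8) -> np.ndarray:
    """Scale vectors to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)

def grounding_heatmap(visual_feats: np.ndarray, audio_feats: np.ndarray) -> np.ndarray:
    """Hypothetical helper: score every pixel against a span of audio.

    visual_feats: (H, W, C) per-pixel features from an assumed image encoder.
    audio_feats:  (T, C) per-time-step features from an assumed audio encoder.
    Returns an (H, W) map; higher values mean the pixel matches the sound better.
    """
    v = l2_normalize(visual_feats)
    a = l2_normalize(audio_feats)
    # Similarity volume of shape (H, W, T): every pixel vs. every audio step.
    sims = np.einsum("hwc,tc->hwt", v, a)
    # Average over the audio steps (e.g. the steps where a dog barks).
    return sims.mean(axis=-1)

rng = np.random.default_rng(0)
visual = rng.normal(size=(4, 4, 8))                   # stand-in per-pixel features
dog = rng.normal(size=8)                              # stand-in "dog" direction in feature space
audio = np.stack([0.5 * dog, 1.0 * dog, 2.0 * dog])   # three barks at different loudness
visual[1, 2] = dog                                    # the pixel that shows the dog

heat = grounding_heatmap(visual, audio)
row, col = np.unravel_index(heat.argmax(), heat.shape)
print(int(row), int(col))  # 1 2
```

Cosine similarity ignores magnitude, so the planted pixel scores about 1.0 against all three barks regardless of their loudness, while the random pixels score lower. In a trained model, the encoders learn to place barking sounds and dog pixels close together on their own; nothing in this sketch learns anything.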

Remarkably, all of this happens without any localization supervision: DenseAV is never told where in a frame a sound or a word comes from. That is why the authors describe it as self-supervised visual grounding of sound and language. Because it can connect visual and audio information from videos, DenseAV has potential applications in fields such as multimedia content analysis, security and surveillance, healthcare, education, entertainment and media, robotics, and autonomous systems. Projects like this pave the way for more capable multimodal AI.
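"Without localization supervision" means the only training signal is which audio track belongs with which video clip. A standard way to turn that pairing into a loss is a symmetric InfoNCE (contrastive) objective, sketched below in NumPy. This is an illustrative assumption, not DenseAV's published loss: the sketch takes a batch-level score matrix as given, whereas a dense model would first pool each clip-audio score from pixel-level similarities. The name `infonce_loss` and the temperature of `0.1` are hypothetical choices.

```python
import numpy as np

def log_softmax(x: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)  # subtract max to avoid overflow in exp
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def infonce_loss(scores: np.ndarray, temperature: float = 0.1) -> float:
    """Hypothetical symmetric InfoNCE loss.

    scores[i, j] is the similarity between video clip i and audio track j.
    The diagonal holds the true pairs; every off-diagonal entry is a mismatch.
    No boxes, masks, or pixel labels are used: pairing is the only supervision.
    """
    logits = scores / temperature
    idx = np.arange(scores.shape[0])
    v2a = -log_softmax(logits, axis=1)[idx, idx].mean()  # pick the right audio for each clip
    a2v = -log_softmax(logits, axis=0)[idx, idx].mean()  # pick the right clip for each audio
    return float((v2a + a2v) / 2)

aligned = np.eye(4)                        # each clip matches only its own audio
shuffled = np.roll(np.eye(4), 1, axis=1)   # each clip matches another clip's audio
print(infonce_loss(aligned) < infonce_loss(shuffled))  # True
```

Minimizing this loss pushes each clip's features toward its own soundtrack and away from the others in the batch; any ability to localize sounds in the frame has to emerge from that pressure alone.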

Better still, the project is open source and available to everyone. The authors have also built an online demo where you can test the model by uploading your own videos. Try it out and see how DenseAV connects what it hears with what it sees!