In this project we are going to learn how to implement virtual reality using Arduino and Processing. For many of us, the movie Iron Man by Jon Favreau has been an inspiration to build new things that make life easier and more fun. I have personally admired the technology shown in the movie and have always wanted to build something similar. So, in this project I have tried to mimic the virtual reality scenes from the movie: we simply wave a hand in front of the computer to move the pointer to the desired location and perform some tasks.

Here I will show you how you can wave your hand in front of a webcam and draw something on your computer. I will also show you how to toggle lights by moving your hand and making clicks with your fingers in the air.

Concept:

To make this happen we have to leverage the power of Arduino and Processing combined. Most of you will be familiar with Arduino, but Processing might be new to you. Processing is a development environment much like the Arduino IDE; it is also open source and free to download. Using Processing you can create simple desktop applications, Android applications and much more. It can also do image processing and voice recognition. It is easy to learn, but do not worry if you are completely new to Processing, because I have kept this tutorial simple so that anyone with interest can get this working in no time.

In this tutorial we use Processing to create a simple desktop application which provides a UI and tracks the position of our hand using image processing. We also need a way to make left and right clicks with our fingers. For that I have used two hall sensors (one on my index finger and the other on my middle finger), which are read by the Arduino Nano. The Arduino then transmits the click status to the computer wirelessly via Bluetooth.

It might sound complicated but, trust me, it is not as hard as it sounds. So let us take a look at the materials needed to get this project up and running.

Materials Required:

- Arduino Nano

- Hall sensor (A3144), 2 Nos

- A small piece of magnet

- Bluetooth Module (HC-05/HC-06)

- 9V battery

- Connecting wires and a dot board

- A pair of gloves

- Arduino IDE (Software)

- Processing IDE (Software)

- A Computer with Webcam and Bluetooth (you can also use external Bluetooth or Webcam for your computer)

Schematics and Hardware:

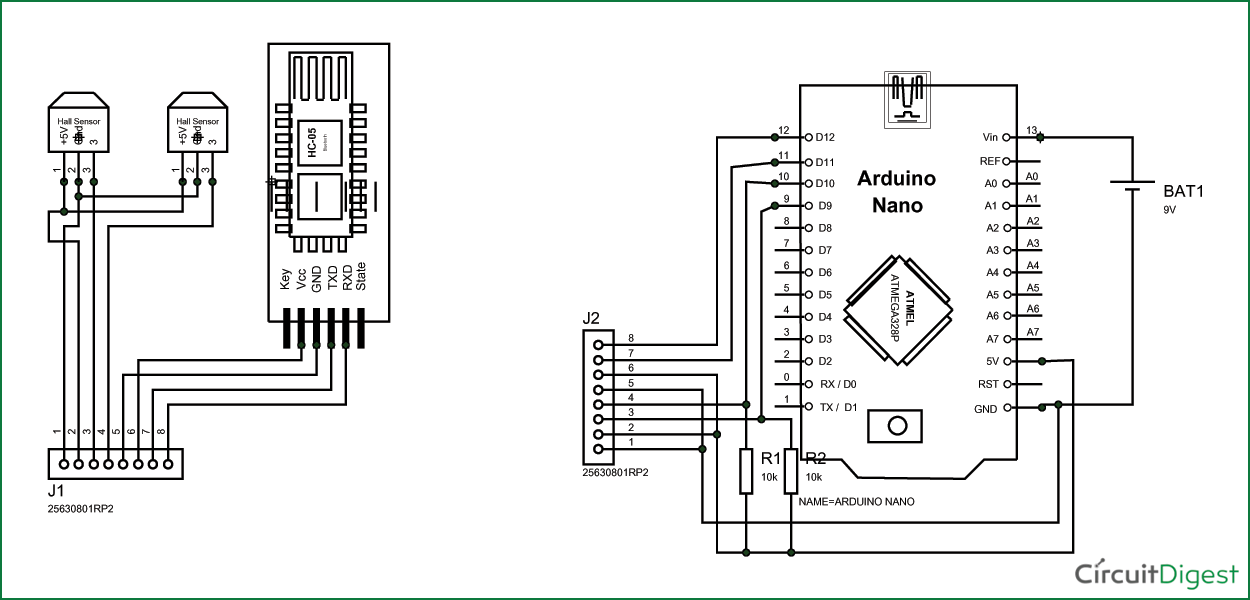

The hardware part of this project is very simple and easy to build. The complete schematic is shown below.

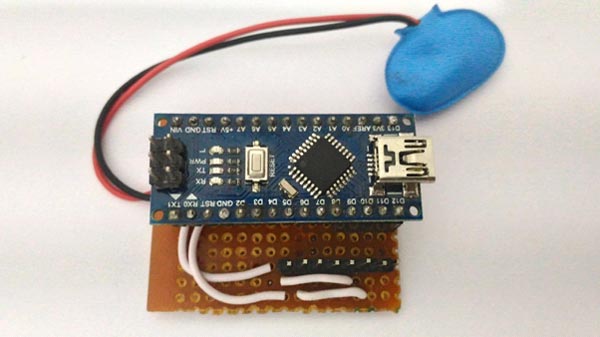

The Arduino, resistors and the berg stick pins are soldered onto a dot board as shown below.

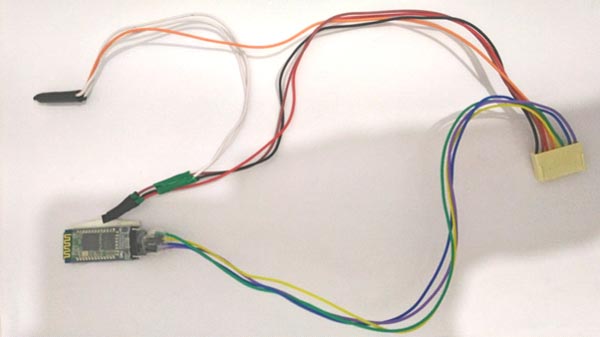

The hall sensors and the Bluetooth module are soldered to connector wires as shown below.

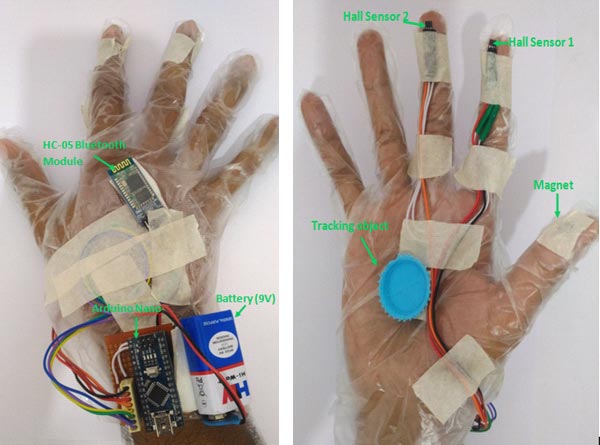

Once these two sections are ready, they can be assembled onto a glove so that the setup is easy to use. I have used disposable plastic gloves, which can be purchased from any medical shop near you. Make sure that the magnet sits on your thumb and that hall sensor 1 and hall sensor 2 sit on your index and middle fingers respectively. I have used duct tape to secure the components in place. Once the components are assembled it should look something like this.

Now let us open the Arduino IDE and start programming.

Program for Arduino:

The purpose of this Arduino code is to read the status of the hall sensors and broadcast it using the Bluetooth module. It should also receive data from Bluetooth and toggle the onboard LED based on the incoming value. The complete program is given at the end of this tutorial; I have also explained a few lines below.

    if (Phs1!=HallState_1 || Phs2!=HallState_2) //Check if new keys are pressed
    {
      if (HallState_1==LOW && HallState_2==LOW)
        Aisha.write(1);
      if (HallState_1==HIGH && HallState_2==LOW)
        Aisha.write(2);
      if (HallState_1==LOW && HallState_2==HIGH)
        Aisha.write(3);
      if (HallState_1==HIGH && HallState_2==HIGH)
        Aisha.write(4);
    }

As shown in the lines above, the Arduino writes a particular value over Bluetooth based on the status of the hall sensors. For example, if hall sensor 1 is HIGH and hall sensor 2 is LOW, we send the value 2 via the Bluetooth module. Make sure you write the values to the BT module and do not print them: write() sends the value as a single raw byte, which is easy to read on the Processing side, whereas print() would send it as ASCII text. Also, a value is sent only when the sensor state differs from the previous reading.
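The encoding described above can be sketched in plain Java, outside of any Arduino or Processing library. This is only an illustration: the class name `HallEncoder` and its methods are hypothetical, `true` stands for a HIGH reading, and, unlike the Arduino sketch, this version also reports the very first reading as a change.

```java
// Illustrative sketch only: HallEncoder and its methods are hypothetical names,
// not part of the Arduino program in this tutorial.
final class HallEncoder {
    private int previous = -1; // last code handed out; -1 means nothing sent yet

    // Map the two sensor readings (true = HIGH, false = LOW) to the codes 1..4
    static int encode(boolean hall1High, boolean hall2High) {
        if (!hall1High && !hall2High) return 1;
        if (hall1High && !hall2High) return 2;
        if (!hall1High && hall2High) return 3;
        return 4;
    }

    // Return the code to transmit, or -1 when the state has not changed
    int next(boolean hall1High, boolean hall2High) {
        int code = encode(hall1High, hall2High);
        if (code == previous) return -1;
        previous = code;
        return code;
    }

    public static void main(String[] args) {
        HallEncoder enc = new HallEncoder();
        System.out.println(enc.next(true, false)); // first reading: code 2
        System.out.println(enc.next(true, false)); // unchanged: -1, nothing to send
        // A written 2 travels as the byte value 2; a printed 2 would travel
        // as the character '2', whose ASCII code is 50.
        System.out.println((int) '2');
    }
}
```

Sending only on change keeps the Bluetooth link quiet while the fingers are not moving, so the receiving side has just one byte to read per click event.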

    if (BluetoothData=='y')
      digitalWrite(ledpin,HIGH);
    if (BluetoothData=='n')
      digitalWrite(ledpin,LOW);

These lines switch the onboard LED, which is connected to pin 13, based on the value received by the BT module. For example, if the module receives a ‘y’ the LED is turned on, and if it receives an ‘n’ it is turned off.
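On the computer side the Processing program sends these two commands as the numbers 121 and 110, which are simply the ASCII codes of 'y' and 'n'. The short Java sketch below illustrates that relationship; the class `LedCommand` and its `apply` method are hypothetical names used only for this example.

```java
// Illustrative sketch only: LedCommand is a hypothetical helper class.
final class LedCommand {
    static final int LED_ON = 'y';  // ASCII 121, sent to switch the LED on
    static final int LED_OFF = 'n'; // ASCII 110, sent to switch the LED off

    // Return the new LED state after one received byte;
    // any other byte leaves the state as it was.
    static boolean apply(int received, boolean ledOn) {
        if (received == LED_ON) return true;
        if (received == LED_OFF) return false;
        return ledOn;
    }

    public static void main(String[] args) {
        System.out.println(LED_ON + " " + LED_OFF);
        System.out.println(apply(121, false));
    }
}
```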

Program for Processing:

The purpose of the Processing program is to create a desktop application which can act as a UI (user interface) and also perform image processing to track a particular object. In this case we track the blue object that we stuck to our gloves. The program has four screens.

- Calibration Screen

- Main Screen

- Paint Screen

- LED toggle Screen

We can navigate from one screen to another by simply waving our hand and dragging screens in the air. We can also make clicks at desired places to toggle the LED or even draw something on the screen.

You can copy and paste the complete Processing program (given at the end) and modify it based on your creativity, or simply download the EXE files from here and follow the steps below to launch the application.

- Install Java on your computer if you have not installed it before
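The drag-to-open behaviour can be pictured as a small state machine: a tile is grabbed while the pointer is over it and key 1 is held, it follows the pointer for as long as key 1 stays held, and its screen opens once the key is released. The plain Java sketch below is a simplified illustration of that idea; the class `DragTile` and its fields are hypothetical names, not the ones used in the program.

```java
// Illustrative sketch only: DragTile is a hypothetical class that mirrors
// the grab / drag / release logic of a tile on the main screen.
final class DragTile {
    boolean grabbed = false; // true once the tile has been picked up
    boolean opened = false;  // true once the tile's screen should be shown

    // Call once per frame with the current pointer and key state
    void update(boolean pointerInside, boolean key1) {
        if ((pointerInside && key1) || (grabbed && key1)) {
            grabbed = true;  // tile follows the pointer while key 1 is held
        } else if (grabbed) {
            opened = true;   // key released after a grab: open the screen
        }
    }

    public static void main(String[] args) {
        DragTile tile = new DragTile();
        tile.update(true, true);   // click inside the tile
        tile.update(false, true);  // drag away while still holding key 1
        tile.update(false, false); // release
        System.out.println(tile.opened);
    }
}
```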

- Install You Cam perfect on your computer

- Power up your Arduino and pair your Computer with the Bluetooth Module

- Launch the application file

If everything goes fine you should notice the LED on your Bluetooth module becoming steady and your webcam light turning on. If you have any problems, reach me through the comment section and I will help you out.

Watch the video at the end to know how to calibrate your application and use it.

If you want to modify the code and build more features into it, the following insights into the program will help.

The Processing IDE can be downloaded from here. If you want to learn more about Processing and create more interesting projects, you can visit the tutorials here.

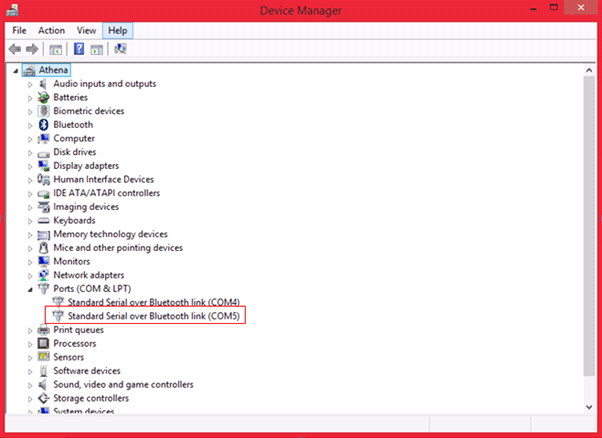

Processing can read serial data; in this program the serial data comes from the Bluetooth COM port. You have to select which COM port your Bluetooth module is connected to using the line below.

    port = new Serial(this,Serial.list()[1],9600);

Here I have selected the port at index 1 of the list returned by Serial.list() (the list is indexed from 0), which is COM5 in my case (see image below), and I have specified that my Bluetooth module runs at a 9600 baud rate.

As said earlier, Processing can also do image processing. In this tutorial the images are fed into the sketch from a webcam, and in each image we look for a particular object. To know more about this you can visit this tutorial.
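Because the position of the Bluetooth port in the list differs from one computer to another, it can be safer to look the port up by name than to hard-code an index. The plain Java sketch below shows one way to do this, assuming the port names are available as a `String[]` (which is what Processing's `Serial.list()` returns). The class `PortPicker` and the sample port names are hypothetical.

```java
// Illustrative sketch only: PortPicker is a hypothetical helper and the
// port names below are made-up sample values.
final class PortPicker {
    // Return the index of the wanted port name, or -1 if it is not listed
    static int indexOf(String[] ports, String wanted) {
        for (int i = 0; i < ports.length; i++) {
            if (ports[i].equalsIgnoreCase(wanted)) return i;
        }
        return -1;
    }

    public static void main(String[] args) {
        String[] ports = { "COM3", "COM5" }; // stand-in for the real port list
        int index = indexOf(ports, "COM5");
        if (index < 0) {
            System.out.println("Bluetooth port not found, check the pairing");
        } else {
            System.out.println("Use the port at index " + index);
        }
    }
}
```

In the sketch itself the returned index would take the place of the fixed `[1]`, after checking that it is not -1.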
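The tracking idea can be sketched in plain Java without the Processing video library: every pixel whose colour is close enough to the chosen colour is counted, and the average position of those pixels becomes the pointer position. The class `ColorCentroid` is a hypothetical name, and the sketch assumes pixels are packed as ARGB integers, which is how Processing stores its `pixels[]` array.

```java
// Illustrative sketch only: ColorCentroid is a hypothetical helper that
// assumes packed ARGB pixel values stored row by row.
final class ColorCentroid {
    // Return {x, y} of the centroid of all matching pixels, or null if none match
    static float[] track(int[] pixels, int width, int height,
                         int trackColor, float threshold) {
        int tr = (trackColor >> 16) & 0xFF;
        int tg = (trackColor >> 8) & 0xFF;
        int tb = trackColor & 0xFF;
        float sumX = 0, sumY = 0;
        int count = 0;
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int c = pixels[x + y * width];
                int dr = ((c >> 16) & 0xFF) - tr;
                int dg = ((c >> 8) & 0xFF) - tg;
                int db = (c & 0xFF) - tb;
                // Compare squared distances to avoid a square root per pixel
                if (dr * dr + dg * dg + db * db < threshold * threshold) {
                    sumX += x;
                    sumY += y;
                    count++;
                }
            }
        }
        if (count == 0) return null;
        return new float[] { sumX / count, sumY / count };
    }

    public static void main(String[] args) {
        int[] pixels = new int[16];   // 4x4 black image
        pixels[1 + 1 * 4] = 0xFF0000FF; // blue pixel at (1, 1)
        pixels[3 + 1 * 4] = 0xFF0000FF; // blue pixel at (3, 1)
        float[] c = track(pixels, 4, 4, 0xFF0000FF, 50f);
        System.out.println(c[0] + ", " + c[1]);
    }
}
```

A smaller threshold makes the match stricter, which helps when other objects in the room have a similar colour; a larger one tolerates uneven lighting.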

I have tried my best to explain the program (given at the end) through the comment lines. You can download the files here.

If you want to know more about the sketch you can reach me through the comment section and I will help you out.

Working:

Once the hardware and software are ready, wear the gloves and get ready for some action. Power the Arduino and then launch the application. The LED on the Bluetooth module should become steady, which means that your application has established a Bluetooth link with your Arduino.

You will get the following screen, where you have to select the object to be tracked. This can be done by simply clicking on the object; in this case the object is the blue disc. Now move your object and notice that the pointer follows it. Use an object of a unique colour and a bright room for best results.

Now touch your thumb to your index finger and you should see the message “Key-1 Pressed”; when you touch your thumb to your middle finger you should see “Key-2 Pressed”. This indicates that everything works fine and the calibration is over. Now click on the Done button.

Once the Done button is pressed you will be directed to the main screen, where you can paint in the air or toggle the LED on the Arduino board, as shown in the video below.
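These messages depend on how the application decodes the byte it receives from the Arduino. The plain Java sketch below mirrors the mapping used by the `key_select()` function in the complete program, where a LOW sensor reading counts as a pressed key; the class `KeyDecoder` itself is a hypothetical name used only for illustration.

```java
// Illustrative sketch only: KeyDecoder is a hypothetical class that mirrors
// the switch statement in key_select().
final class KeyDecoder {
    // Return {key1, key2} for a received code, or null for an unknown code
    static boolean[] decode(int data) {
        switch (data) {
            case 1: return new boolean[] { true, true };   // both sensors LOW
            case 2: return new boolean[] { false, true };  // only sensor 2 LOW
            case 3: return new boolean[] { true, false };  // only sensor 1 LOW
            case 4: return new boolean[] { false, false }; // both sensors HIGH
            default: return null;
        }
    }

    public static void main(String[] args) {
        boolean[] keys = decode(3);
        if (keys[0]) System.out.println("Key-1 Pressed");
        if (keys[1]) System.out.println("Key-2 Pressed");
    }
}
```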

Complete Project Code

    #include <SoftwareSerial.h> // import the software serial library

    // SoftwareSerial takes (rxPin, txPin) as seen from the Arduino:
    // D11 receives from the module's TX, D12 transmits to the module's RX
    SoftwareSerial Aisha(11, 12);

    int ledpin=13; // LED on D13 shows the on/off state
    int hall_1=9;
    int hall_2=10;
    int BluetoothData; // the data received from the computer
    int HallState_1,HallState_2;
    int change; // not used in this sketch
    int Phs1,Phs2; // previous hall sensor states

    void setup()
    {
      Aisha.begin(9600); // Bluetooth module works at 9600 baud
      pinMode(ledpin,OUTPUT); // LED pin as output
      pinMode(hall_1,INPUT); // hall sensor 1 as input
      pinMode(hall_2,INPUT); // hall sensor 2 is also an input
    }

    void loop()
    {
      if (Aisha.available()) // if data is sent from the laptop
        BluetoothData=Aisha.read(); // read it and store it in BluetoothData

      // remember the previous readings, then take new ones
      Phs1=HallState_1;
      Phs2=HallState_2;
      HallState_1 = digitalRead(hall_1);
      HallState_2 = digitalRead(hall_2);

      if (Phs1!=HallState_1 || Phs2!=HallState_2) // check if new keys are pressed
      {
        if (HallState_1==LOW && HallState_2==LOW)
          Aisha.write(1);
        if (HallState_1==HIGH && HallState_2==LOW)
          Aisha.write(2);
        if (HallState_1==LOW && HallState_2==HIGH)
          Aisha.write(3);
        if (HallState_1==HIGH && HallState_2==HIGH)
          Aisha.write(4);
      }

      if (BluetoothData=='y')
        digitalWrite(ledpin,HIGH);
      if (BluetoothData=='n')
        digitalWrite(ledpin,LOW);
    }

//----------- Arduino code ends---------------//

//------------Processing code starts-----------//

    import processing.video.*;  // Import library to use video
    import processing.serial.*; // Import library to use the serial port (Bluetooth)

    //**Global Variable Declarations**//
    Serial port; // port is an object variable for serial communication
    int data;
    boolean calibration = false;
    int mirror = 0;
    int mirrorn = -1;
    PImage Done, Aisha, Paint, LED_Toggle, LED_on, LED_off;
    boolean key1, key2, key3, movePaint, PaintScreen, PaintScreenClear, moveLED, LEDscreen;
    float Paintx, Painty, avgX, avgY, LEDx, LEDy;
    int count;
    PImage img = createImage(380, 290, RGB);
    int Px, Py;
    Capture video;        // create an object named video
    color trackColor;     // variable to store the color that we are going to track
    float threshold = 50; // can be varied by the user
    //_____End of variable declaration______//

    //*Function to load all the images from the data folder of the sketch*//
    void loadImages()
    {
      Done = loadImage("Done.png");
      Aisha = loadImage("Aisha.png");
      Paint = loadImage("Paint.png");
      LED_Toggle = loadImage("LED_Toggle.png");
      LED_on = loadImage("LED_on.png");
      LED_off = loadImage("LED_off.png");
    }
    //_____End of loadImages______//

    //**Executes only once**//
    void setup() {
      size(800, 600);
      loadImages();

      String[] cameras = Capture.list();
      printArray(cameras);
      // Index 34 is specific to the author's computer; pick the entry
      // you want from the list printed above
      video = new Capture(this, cameras[34]);
      video.start();

      key1=key2=key3=false;
      Paintx=width/10;
      Painty=height/8.5;
      LEDx=width/1.1;
      LEDy=height/8.5;
      movePaint=PaintScreen=PaintScreenClear=moveLED=LEDscreen=false;

      // Index 1 is the Bluetooth COM port on the author's computer
      port = new Serial(this,Serial.list()[1],9600);
      println(Serial.list());
    }
    //**End of Setup**//

    //**Triggered to update each frame of the video**//
    void captureEvent(Capture video) // when a new image comes in
    {
      video.read(); // read it as a video frame
    }

    //*Function to point which color to track*//
    void Calibrate()
    {
      image(video,0,0);
      imageMode(CORNERS);
      image(Done,width/1.2,height/1.1,width,height); // position of the Done button

      if (mouseX>width/1.2 && mouseY>height/1.1) // if mouse is within the Done button
      {
        calibration=true;
        cursor(HAND);
        mirrorn=1;
        mirror=width;
      }

      fill(#1B96E0);
      textSize(20);
      if (key1==true) // if hall sensor 1 is active on Arduino
        text("Key-1 Pressed",width/12,height/1.05); // text and its position
      if (key2==true) // if hall sensor 2 is active on Arduino
        text("Key-2 Pressed",width/12,height/1.05); // text and its position
    }
    //_____End of Calibration______//

    //*Function to represent the main screen*//
    void UI()
    {
      imageMode(CORNERS);
      image(Aisha,0,0,width,height);
      imageMode(CENTER);

      if ((avgX<(width/10+((width/4)/2)) && avgY<(height/8.5+((height/4)/2)) && key1==true) || (movePaint==true&&key1==true)) // if clicked inside the image
      {
        movePaint=true;
        image(Paint, avgX,avgY,width/4, height/4); // drag the image
      }
      else if (movePaint==false)
        image(Paint, Paintx,Painty,width/4, height/4); // place the image at the corner
      else
        PaintScreen=true; // released after dragging: open the Paint screen

      if ((avgX>(width/1.1-((width/4)/2)) && avgY<(height/8.5+((height/4)/2)) && key1==true) || (moveLED==true&&key1==true)) // if clicked inside the image
      {
        moveLED=true;
        image(LED_Toggle, avgX,avgY,width/4, height/4); // drag the image
      }
      else if (moveLED==false)
        image(LED_Toggle, LEDx,LEDy,width/4, height/4); // place the image at the corner
      else
        LEDscreen=true; // released after dragging: open the LED screen
    }
    //_____End of main screen function______//

    //*Function to represent the Paint screen*//
    void Paintfun()
    {
      imageMode(CENTER);
      background(#0B196A);
      image(Paint, width/2,height/2,width/1.5, height);

      img.loadPixels();
      // img is 380 x 290 pixels, so both loops stop before the far edge
      // to keep the pixel index inside the array
      for (int IX = 210, Px=0; IX<590; IX++, Px++)
      {
        for (int IY = 85, Py=0; IY<375; IY++, Py++)
        {
          if ((dist(avgX,avgY,IX,IY)<4) && key1==true)
            img.pixels[(Px+(Py*img.width))] = color(255); // paint this pixel white
        }
      }
      if (key2==true)
        PaintScreen = false; // key 2 leaves the Paint screen

      img.updatePixels();
      image(img, width/2, height/2.6);
    }
    //_____End of Paint screen function______//

    //*Function to display the LED toggle screen*//
    void LEDfun()
    {
      imageMode(CENTER);
      background(255);
      image(LED_on,(width/2 - width/4), height/3,width/4, height/5);
      image(LED_off,(width/2 + width/4), height/3,width/4, height/5);
      textSize(50);
      textAlign(CENTER);

      if (key1==true && avgX<300 && avgY>150 && avgX>95 && avgY<260)
      {
        fill(#751EE8);
        text("LED turned on",width/2,height/1.5);
        port.write(121); // 121 is the ASCII code for 'y'
      }

      if (key1==true && avgX<700 && avgY>150 && avgX>500 && avgY<260)
      {
        fill(#FC0808);
        text("LED turned off",width/2,height/1.5);
        port.write(110); // 110 is the ASCII code for 'n'
      }
    }
    //_____End of LED screen function_____//

    //*Function to know which key is pressed*//
    void key_select() {
      switch(data){
        case 1: // both sensors LOW
          key1=true; key2=true;
          break;
        case 2: // sensor 1 HIGH, sensor 2 LOW
          key1=false; key2=true;
          break;
        case 3: // sensor 1 LOW, sensor 2 HIGH
          key1=true; key2=false;
          break;
        case 4: // both sensors HIGH
          key1=false; key2=false;
          break;
      }
    }
    //_____End of function______//

    void draw() {
      if (port.available()>0) // if there is an incoming BT value
      {
        data=port.read();        // read the incoming BT value and save it in data
        println(key1,key2,data); // print for debugging
        key_select();            // update the variables key1 and key2
      }

      video.loadPixels();

      if (calibration==false) // no calibration done
        Calibrate(); // Calibration screen
      if (calibration==true && (PaintScreen==false || LEDscreen==false) )
        UI(); // Main screen
      if (PaintScreen==true && calibration==true)
        Paintfun(); // Paint screen
      if (LEDscreen==true && calibration==true)
        LEDfun(); // LED toggle screen
      if (key2==true)
        movePaint=PaintScreen=PaintScreenClear=moveLED=LEDscreen=false; // go back to main screen

      avgX = avgY = count = 0;

      // Begin loop to walk through every pixel
      for (int x = 0; x < video.width; x++ ) {
        for (int y = 0; y < video.height; y++ ) {
          int loc = x + y * video.width;

          // What is the current color
          color currentColor = video.pixels[loc];
          float r1 = red(currentColor);
          float g1 = green(currentColor);
          float b1 = blue(currentColor);
          float r2 = red(trackColor);
          float g2 = green(trackColor);
          float b2 = blue(trackColor);

          float d = distSq(r1, g1, b1, r2, g2, b2);
          if (d < threshold*threshold) {
            stroke(255);
            strokeWeight(1);
            // point((mirror-x)*mirrorn, y);
            avgX += x;
            avgY += y;
            count++;
          }
        }
      }

      if (count > 0) {
        avgX = avgX / count;
        avgY = avgY / count;

        // Draw a circle at the tracked position
        fill(#21FADB);
        avgX = (mirror-avgX)*mirrorn;
        ellipse(avgX, avgY, 15, 15);
      }
    }

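    // Squared distance between two colors in RGB space
    float distSq(float x1, float y1, float z1, float x2, float y2, float z2) {
      float d = (x2-x1)*(x2-x1) + (y2-y1)*(y2-y1) + (z2-z1)*(z2-z1);
      return d;
    }

    void mousePressed() {
      // Only pick a color during calibration, and only when the click
      // falls inside the video frame
      if (calibration==false && mouseX<video.width && mouseY<video.height)
      {
        int loc = mouseX + mouseY*video.width;
        trackColor = video.pixels[loc]; // load the color to be tracked
      }
    }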

Comments

hi can we use arduino nano code in arduino uno

Sir i want to know about future scope & application & advantages of this project.

Hi sir, i'm sriram. I'm studying in "Bsc electronics and communication".my finial year project is your virtual reality project.I take your project.it's really good project. but I have a doubt "WHAT IS THE TRACKING OBJECT COMPONENTS IN YOUR PROJECT.

There is no special tracking object here. The program tracks any particular color. It can even be your pen cap or anything else with a distinctive color in the room.

i cant see key-1 presse and key-2pressed... and cant track color please help sir

It means that there is some problem with the hardware. Make sure that there is a successful Bluetooth connection between the laptop and the Arduino's HC-05, and also make sure you have wired the hall sensors properly

I have a problem with the color follower's accuracy and click

Click is a hardware function. So it should work properly.

The problem with color tracking might be due to bad lighting

Bro change on threshold=25 and fill(trackcolour)

Can we workout this project using nodemcu?

Sir when I am clicking on any one sensor than it showing both hall sensor clicked

should be a hardware problem

sir,

i have a confusion on tracking object.actually what is tracking object??? is that any kind of module???

could u explain it cleaarly

The tracking object is just any piece of material of particular colour. It can even be a pen cap

How To install Java on computer

I was connected all connection as you said but it showing key1 ,key2 press

sir how to save the image after drawing

I created this program just to demonstrate what is possible, if you want more functionalities then you should code it by yourself

One crude way would be to take a screen shot

respected sir i am not able to download pricessing.ide please give me the softwerelink.

this is showing virus in my laptop

You can download processing from here https://processing.org/download/

can you please show the connection between Bluetooth and aurdino clearly

When I powered the board with battery, my bluetooth module didn't switch on. But, whereas I connected the usb to my laptop, that time both switched on

It's because the battery cannot provide the initial current. Try adding a 10uF capacitor across the VCC (positive lead) and ground (negative lead) terminals of the Bluetooth module, or use a new battery

WHAT IS THE MAIN AIM OF THE PROJECT??

CAN YOU GIVE ME SOME APPLICATIONS FOR THIS PROJECT??

Sir, This Processing IDE should in JAVA mode or ARDUINO mode to execute.

Sir I need ur help in this project

As we use Arduino UNO, will we have to use the same pins for arduino nano for bluetooth, or use the digital 0 and 1 for RX and TX respectively

color currentColor = video.pixels[loc];

float r1 = red(currentColor);

float g1 = green(currentColor);

float b1 = blue(currentColor);

float r2 = red(trackColor);

float g2 = green(trackColor);

float b2 = blue(trackColor);

float d = distSq(r1, g1, b1, r2, g2, b2);

can you please tell me why did you input 2 times the rgb's?

Also where is the part of the code we need to change, to change the color being tracked?

i am facing problem in this project can i get your email id throught which i can contact you

hardware is setuped and it is indicating key1 is pressed and key 2 is pressed but not doing calibration i.e traching of object a grey colour window is displayed with done button


Ty sir it worked