Broadcom Inc. has introduced Tomahawk 6, the world’s first 102.4 Terabits per second (Tbps) Ethernet switch chip, and it is already shipping. The chip is designed for large-scale AI training and inference clusters and, according to Broadcom, packs more switching bandwidth into a single piece of silicon than any other Ethernet switch.

Tomahawk 6 doubles the switching capacity of its predecessor, supports both 100G and 200G PAM4 SerDes, and can be deployed with either passive copper or co-packaged optics (CPO). A single chip handles up to 1,024 100G SerDes lanes, which reduces system complexity and total cost of ownership (TCO). The chip targets both scale-up and scale-out AI architectures and is meant to meet the networking demands of emerging clusters of 100,000 to 1 million XPUs. It supports a wide range of network topologies, including Clos, torus, rail-only, and rail-optimized.
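The lane counts follow directly from the headline capacity. The short Python sketch below is only back-of-the-envelope arithmetic, not a Broadcom tool: it divides 102.4 Tbps by the per-lane rate, and the list of port speeds is an illustrative assumption rather than a published configuration table.

```python
# Back-of-the-envelope check: lane count x per-lane rate = aggregate capacity.
CAPACITY_GBPS = 102_400  # 102.4 Tbps expressed in Gbps


def lanes_needed(lane_rate_gbps: int) -> int:
    """SerDes lanes required to carry the full switching capacity."""
    return CAPACITY_GBPS // lane_rate_gbps


def port_count(port_speed_gbps: int) -> int:
    """Ports of a given speed that the full capacity could feed."""
    return CAPACITY_GBPS // port_speed_gbps


for rate in (100, 200):
    print(f"{rate}G lanes: {lanes_needed(rate)}")

# Port speeds below are assumed for illustration only.
for speed in (1600, 800, 400, 200):
    print(f"{speed}G ports: {port_count(speed)}")
```

At 100G per lane the chip needs 1,024 lanes to reach 102.4 Tbps, while at 200G per lane the same capacity takes 512 lanes.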

The 102.4 Tbps switching capacity is its most notable feature. Other key features include 200G/100G PAM4 SerDes, Cognitive Routing 2.0, support for co-packaged optics, and low-latency links that comply with Ultra Ethernet Consortium specifications. In addition, it embraces open standards through the new Scale-Up Ethernet (SUE) framework. Tomahawk 6 integrates into Broadcom’s full-stack AI networking platform, which includes Jericho switches, Thor NICs, Agera retimers, and Sian optical DSPs. It also remains compatible with any Ethernet NIC or XPU, allowing operators to deploy unified scale-up and scale-out AI networks without proprietary lock-in.

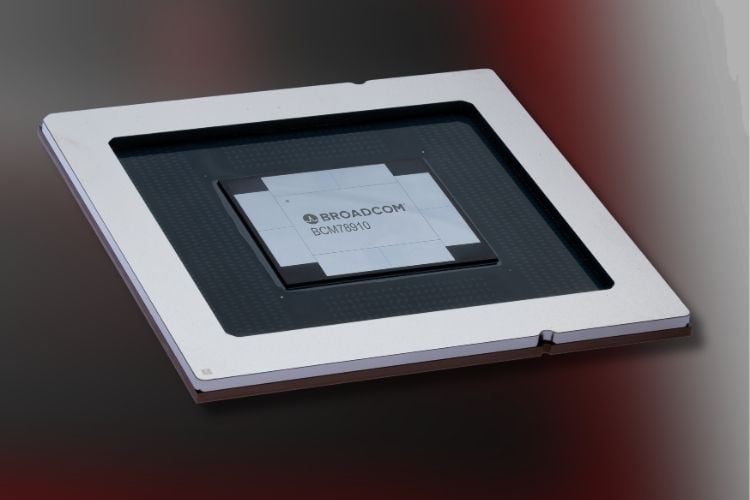

The Tomahawk 6 (BCM78910) is an L2/L3 multilayer switch with flexible I/O configurations. Hyperscale cloud operators have already planned deployments connecting over 100,000 AI processors. Partners including AMD, Arista, Juniper, Supermicro, and others are designing systems around the Tomahawk 6 to support modern data center and AI workloads. In short, the chip is expected to see real-world use in large AI and cloud computing deployments soon.
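To give a rough sense of how a deployment of over 100,000 processors relates to switch radix, the sketch below applies the textbook sizing formulas for non-blocking folded-Clos (fat-tree) fabrics. It assumes each endpoint attaches with a single 200G port, so a 102.4 Tbps switch has a radix of 512, and that the fabric is built 1:1 with no oversubscription. These are illustrative assumptions, not a description of any operator's actual design.

```python
def two_tier_endpoints(radix: int) -> int:
    """Max endpoints in a non-blocking two-tier leaf-spine fabric.

    Each leaf uses half its ports for endpoints and half for uplinks.
    Each spine port connects to one leaf, so there can be `radix` leaves.
    """
    downlinks_per_leaf = radix // 2
    max_leaves = radix
    return max_leaves * downlinks_per_leaf


def three_tier_endpoints(radix: int) -> int:
    """Max endpoints in a non-blocking three-tier fat tree (k^3 / 4)."""
    return radix ** 3 // 4


RADIX = 512  # assumed: 102.4 Tbps split into 512 ports of 200G

print(f"two-tier:   {two_tier_endpoints(RADIX):,} endpoints")
print(f"three-tier: {three_tier_endpoints(RADIX):,} endpoints")
```

Under these assumptions a two-tier fabric tops out at 131,072 endpoints, which is why a radix of this size makes clusters in the 100,000-processor range reachable with only two switching layers. Real designs (rail-optimized layouts, multiple ports per XPU, oversubscription) will shift these numbers.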