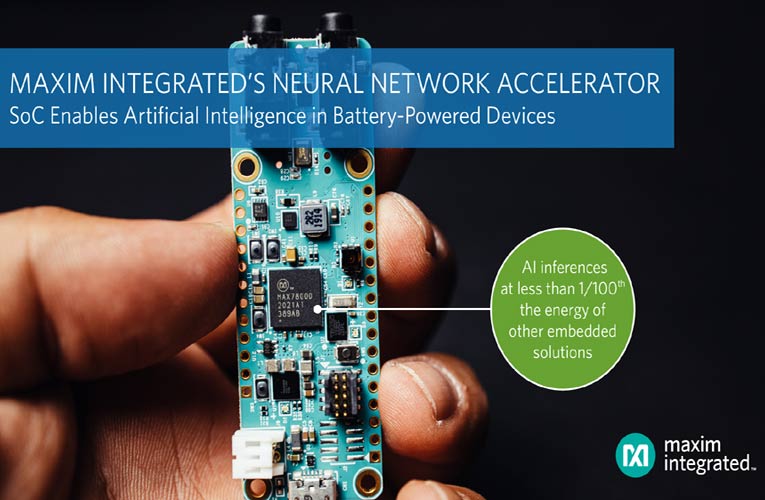

The MAX78000 low-power, neural-network-accelerated microcontroller from Maxim Integrated moves artificial intelligence (AI) to the edge without performance compromises in battery-powered internet of things (IoT) devices. Executing AI inferences at less than 1/100th the energy of software solutions dramatically improves run time for battery-powered AI applications while enabling complex new AI use cases previously considered impossible. These power improvements come with no compromise in latency or cost: the MAX78000 executes inferences 100x faster than software solutions running on low-power microcontrollers, at a fraction of the cost of FPGA or GPU solutions.

AI technology allows machines to see and hear, making sense of the world in ways that were previously impractical. In the past, bringing AI inferences to the edge meant gathering data from sensors, cameras, and microphones, sending that data to the cloud to execute an inference, then sending an answer back to the edge. This architecture works, but the round trip to the cloud gives edge applications poor latency and energy performance. As an alternative, low-power microcontrollers can be used to implement simple neural networks; however, latency suffers and only simple tasks can be run at the edge.

By integrating a dedicated neural network accelerator with a pair of microcontroller cores, the MAX78000 overcomes these limitations, enabling machines to see and hear complex patterns with local, low-power AI processing that executes in real time. Applications such as machine vision, audio, and facial recognition can be made more efficient since the MAX78000 can execute inferences at less than 1/100th the energy required by a microcontroller. At the heart of the MAX78000 is specialized hardware designed to minimize the energy consumption and latency of convolutional neural networks (CNNs). This hardware runs with minimal intervention from either microcontroller core, making operation extremely streamlined. Energy and time are used only for the mathematical operations that implement a CNN. To get data from the external world into the CNN engine efficiently, customers can use one of the two integrated microcontroller cores: the ultra-low-power Arm® Cortex®-M4 core, or the even lower-power RISC-V core.

AI development can be challenging, and Maxim Integrated provides comprehensive tools for a more seamless evaluation and development experience. The MAX78000EVKIT# includes audio and camera inputs, along with demos for large-vocabulary keyword spotting and facial recognition that run out of the box. Complete documentation helps engineers train networks for the MAX78000 in the tools they already use: TensorFlow or PyTorch.

Key Advantages

- Low Energy: Hardware accelerator coupled with ultra-low-power Arm M4F and RISC-V microcontrollers moves intelligence to the edge at less than 1/100th the energy of the closest competitive embedded solutions.

- Low Latency: Performs AI functions at the edge to achieve complex insights, enabling IoT applications to reduce or eliminate cloud transactions and cutting latency by more than 100x compared to software.

- High Integration: Low-power microcontroller with neural network accelerator enables complex, real-time insights in battery-powered IoT devices.

Availability and Pricing

- The MAX78000 is available from authorized distributors; pricing available upon request.

- The MAX78000EVKIT# evaluation kit is available for $168.

- For details about Maxim Integrated’s Artificial Intelligence solutions, visit http://bit.ly/Maxim_AI