OpenNCC NCB from EyeCloud.AI is an accelerated AI reference platform designed for edge AI applications. It comes in two variants: a USB version and an FPC version. It is a complete, open-source replacement for Intel’s Neural Compute Stick 2 (NCS2) and is built on the Intel Movidius Myriad X VPU, Intel’s first VPU to feature the Neural Compute Engine. The Neural Compute Engine, together with 16 SHAVE vector cores and a high-throughput intelligent memory fabric, makes the Myriad X well suited to on-device deep neural network and computer vision workloads.

OpenNCC NCB supports up to six locally configured inference pipelines, and two pipelines can run concurrently in real time. Through intermediate processing in the Host App, users can chain models across multiple stages or run several models concurrently. Users can also add more NCB devices as their compute requirements grow, and the SDK dynamically allocates compute across them.
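The chaining and multi-device ideas above can be sketched in plain Python. This is a minimal, hypothetical illustration, not the OpenNCC SDK API: `SimulatedNCB`, `DevicePool`, `detect_stage`, `classify_stage`, and `crop` are invented names, and both "models" are stubs. It shows a two-stage chain in which the host crops each detection from stage one before passing it to stage two, while every request goes to the device that has served the fewest requests so far (a simple stand-in for the SDK's dynamic allocation).

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)


@dataclass
class SimulatedNCB:
    """Hypothetical stand-in for one NCB device; not an OpenNCC SDK class."""
    name: str
    handled: int = 0  # total requests this device has served

    def infer(self, model: Callable, data):
        self.handled += 1
        return model(data)


@dataclass
class DevicePool:
    """Sends each request to the device with the fewest requests served so far."""
    devices: List[SimulatedNCB] = field(default_factory=list)

    def run(self, model: Callable, data):
        device = min(self.devices, key=lambda d: d.handled)
        return device.infer(model, data)


def detect_stage(frame) -> List[Box]:
    """Stub detector returning fixed boxes (a real model would run on the VPU)."""
    return [(0, 0, 2, 2), (2, 1, 2, 3)]


def classify_stage(crop_pixels) -> str:
    """Stub classifier that labels a crop by the sum of its pixel values."""
    return "bright" if sum(map(sum, crop_pixels)) > 10 else "dark"


def crop(frame, box: Box):
    """Host-side intermediate processing: cut the box region out of the frame."""
    x, y, w, h = box
    return [row[x:x + w] for row in frame[y:y + h]]


def two_stage_chain(pool: DevicePool, frame):
    boxes = pool.run(detect_stage, frame)  # stage 1: detection
    # Stage 2: classify every detected region after cropping it on the host.
    return [(box, pool.run(classify_stage, crop(frame, box))) for box in boxes]


if __name__ == "__main__":
    frame = [[1, 1, 5, 5], [1, 1, 5, 5], [0, 0, 5, 5], [0, 0, 5, 5]]
    pool = DevicePool([SimulatedNCB("ncb0"), SimulatedNCB("ncb1")])
    print(two_stage_chain(pool, frame))
    print([d.handled for d in pool.devices])
```

Adding a device to the pool is the only change needed to spread the same chain over more hardware; the real SDK's allocation policy may differ from this fewest-requests rule.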

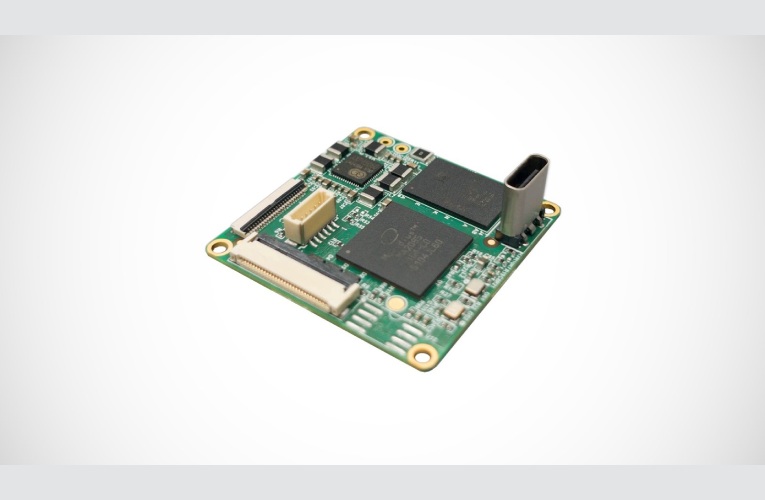

Features & Specifications

- VPU: Intel Movidius Myriad X MV2085

- Vector Processors: 16 SHAVEs

- Vision Accelerators: 20+

- Neural Network Capability: Two Neural Compute Engines (Up to 4 TOPS)

- RAM: 8 Gb, LPDDR4 (1600 MHz, 32-bit)

- Power Supply: 5 V / 2 A

- Data Interface: USB 3.0 / USB 2.0 over USB Type-C (USB version), or USB 2.0 over FPC (FPC version)

- Dimensions: 1.5" x 1.5" (38 mm x 38 mm)

- Board Weight: 0.02 lbs (9 g)

- Supports working with Arm Linux SoCs and Raspberry Pi boards

- Runs AI inference models on-board

- Compatible with Intel's Distribution of OpenVINO (versions 2020.3 and 2021.4)

Like the Intel NCS2, it works together with a host machine. The Host App on the host machine acquires a video stream from an external source (for example, a local file, an IP camera, a webcam, or a V4L2 MIPI camera), configures the preprocessing module to match the input resolution and format expected by the inference model, then sends the stream to the OpenNCC NCB through the OpenNCC Native SDK for inference and receives the inference results. Inference can run in either synchronous or asynchronous mode. The inference pipeline on the OpenNCC SoM is configured through an OpenNCC model JSON file.
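The host-side flow described above (preprocess each frame to the model's input size and layout, send it for inference, collect the result) can be sketched as follows. This is a hypothetical illustration, not the OpenNCC Native SDK: `preprocess`, `fake_ncb_infer`, `run_sync`, and `run_async` are invented names, the device call is a stub that sleeps and returns the mean of channel 0, and the planar CHW layout with nearest-neighbour resizing is an assumption (the real target format is dictated by the model). Synchronous mode blocks on each request; asynchronous mode submits every request first and gathers results afterwards.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List

Frame = List[List[List[int]]]  # height x width x channels (interleaved HWC)


def preprocess(frame: Frame, out_w: int, out_h: int) -> List[List[List[int]]]:
    """Nearest-neighbour resize to (out_h, out_w), then interleaved HWC -> planar CHW."""
    in_h, in_w, channels = len(frame), len(frame[0]), len(frame[0][0])
    return [
        [[frame[y * in_h // out_h][x * in_w // out_w][c] for x in range(out_w)]
         for y in range(out_h)]
        for c in range(channels)
    ]


def fake_ncb_infer(tensor) -> float:
    """Hypothetical stand-in for a device call: returns the mean of channel 0."""
    time.sleep(0.01)  # simulate transfer and inference latency
    plane = tensor[0]
    return sum(map(sum, plane)) / (len(plane) * len(plane[0]))


def run_sync(frames: List[Frame], w: int, h: int) -> List[float]:
    # Synchronous mode: each call blocks until its result comes back.
    return [fake_ncb_infer(preprocess(f, w, h)) for f in frames]


def run_async(frames: List[Frame], w: int, h: int, workers: int = 2) -> List[float]:
    # Asynchronous mode: submit all requests, then collect results in order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(fake_ncb_infer, preprocess(f, w, h)) for f in frames]
        return [fut.result() for fut in futures]


if __name__ == "__main__":
    frame = [[[1, 10, 100], [2, 20, 200]], [[3, 30, 300], [4, 40, 400]]]
    print(run_sync([frame, frame], 4, 4))
    print(run_async([frame, frame], 4, 4))
```

Both modes return the same results; the asynchronous version simply lets the host keep several requests in flight, which is where overlapping capture, preprocessing, and inference pays off.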