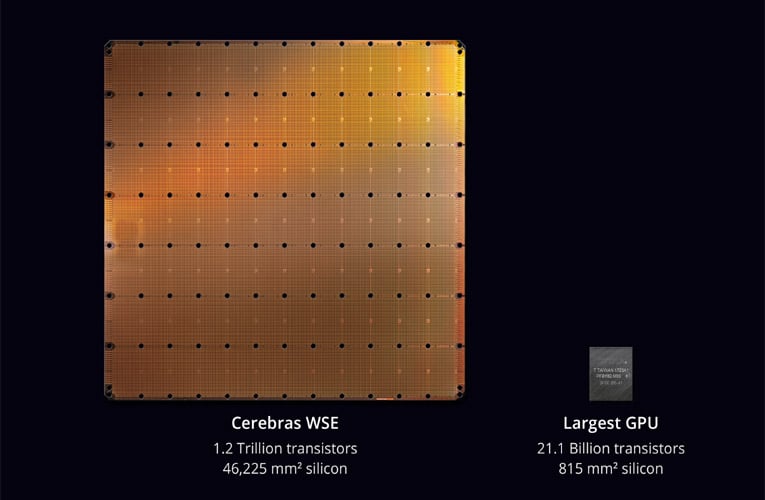

Cerebras Systems, a US-based startup, has launched what it calls the largest chip ever built: it integrates more than 1.2 trillion transistors on 46,225 square millimeters of silicon. The new Cerebras Wafer Scale Engine (WSE) is optimized for AI and has 56.7 times the area of the largest graphics processing unit (GPU), which measures 815 square millimeters and contains 21.1 billion transistors. The WSE provides 3,000 times more high-speed on-chip memory and 10,000 times more memory bandwidth. According to Cerebras, the larger chip processes information more quickly and reduces time-to-insight, or “training time”, which lets researchers test more ideas, use more data and solve new problems.
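The 56.7x figure is an area ratio, and it can be recomputed directly from the numbers in the paragraph above. The short Python sketch below does that arithmetic and also compares transistor counts. The side-length line assumes a square die, which is an assumption for illustration rather than a figure stated in the article.

```python
import math

# Figures quoted in the article (areas in square millimeters).
WSE_AREA_MM2 = 46_225
WSE_TRANSISTORS = 1.2e12
GPU_AREA_MM2 = 815
GPU_TRANSISTORS = 21.1e9

area_ratio = WSE_AREA_MM2 / GPU_AREA_MM2
transistor_ratio = WSE_TRANSISTORS / GPU_TRANSISTORS

# Assumption: treat the WSE as a square die to get a side length.
side_mm = math.sqrt(WSE_AREA_MM2)

print(f"area ratio:       {area_ratio:.1f}x")        # 56.7x
print(f"transistor ratio: {transistor_ratio:.1f}x")  # 56.9x
print(f"side (if square): {side_mm:.0f} mm")         # 215 mm
```

The transistor ratio lands close to the area ratio, which is what you would expect if both chips have broadly similar transistor density.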

The Cerebras WSE is designed for AI and contains fundamental innovations that advance the state of the art by solving decades-old technical challenges that have limited chip size, such as cross-reticle connectivity, yield, power delivery and packaging. The WSE accelerates both calculation and communication, which reduces training time. With 56.7 times more silicon area than the largest GPU, the WSE can provide more cores to do more calculations and place more memory closer to those cores, so the cores can operate efficiently. Because the vast array of cores and memory sits on a single chip, all communication stays on the silicon itself.

The Cerebras WSE chip contains 46,225 mm² of silicon and houses 400,000 AI-optimized, no-cache, no-overhead compute cores and 18 gigabytes of local, distributed, superfast SRAM. The chip delivers 9 petabytes per second of memory bandwidth, and its cores are linked by a fine-grained, all-hardware, on-chip mesh-connected communication network with an aggregate bandwidth of 100 petabits per second. This extremely large, low-latency communication bandwidth lets groups of cores collaborate with maximum efficiency, and memory bandwidth is no longer a bottleneck. Together, more local memory, more cores and a low-latency, high-bandwidth fabric form the optimal architecture for accelerating AI work.
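To put the aggregate numbers in per-core terms, the back-of-envelope Python sketch below divides them evenly across the 400,000 cores. It assumes decimal SI units (1 GB = 10^9 bytes, 1 PB = 10^15 bytes) and a perfectly even split, so treat the results as rough; binary units would shift the per-core SRAM figure slightly. It also converts the fabric figure from bits to bytes (the memory figure is in bytes, the fabric figure in bits) and derives the GPU baseline implied by the 3,000x and 10,000x ratios quoted earlier; those GPU numbers are inferred from the ratios, not stated in the article.

```python
# Back-of-envelope per-core shares of the WSE figures quoted in the article.
# Assumptions: decimal SI units and resources spread evenly across all cores.
CORES = 400_000
SRAM_BYTES = 18e9          # 18 GB of on-chip SRAM
MEM_BW_BYTES_S = 9e15      # 9 PB/s aggregate memory bandwidth
FABRIC_BITS_S = 100e15     # 100 Pb/s aggregate fabric bandwidth (bits, not bytes)

sram_per_core_kb = SRAM_BYTES / CORES / 1e3
mem_bw_per_core_gb_s = MEM_BW_BYTES_S / CORES / 1e9
fabric_pb_s = FABRIC_BITS_S / 8 / 1e15   # convert bits/s to petabytes/s

# GPU baseline implied by the "3,000x memory" and "10,000x bandwidth" ratios.
implied_gpu_sram_mb = SRAM_BYTES / 3_000 / 1e6
implied_gpu_bw_gb_s = MEM_BW_BYTES_S / 10_000 / 1e9

print(f"SRAM per core:                {sram_per_core_kb:.0f} KB")      # 45 KB
print(f"memory bandwidth per core:    {mem_bw_per_core_gb_s:.1f} GB/s")  # 22.5 GB/s
print(f"fabric bandwidth:             {fabric_pb_s:.1f} PB/s")        # 12.5 PB/s
print(f"implied GPU on-chip memory:   {implied_gpu_sram_mb:.0f} MB")   # 6 MB
print(f"implied GPU memory bandwidth: {implied_gpu_bw_gb_s:.0f} GB/s") # 900 GB/s
```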

Key features of the Cerebras WSE chip:

- Increased cores: The WSE integrates 400,000 AI-optimized compute cores called SLAC (Sparse Linear Algebra Cores), which are programmable, flexible, and optimized for the sparse linear algebra that underpins all neural network computation. Because SLAC cores are programmable, they can run all neural network algorithms in the constantly changing machine learning field. The WSE cores also incorporate Cerebras-invented sparsity harvesting technology, which accelerates computational performance on sparse workloads (workloads that contain zeros) such as deep learning.

- Enhanced memory: The Cerebras WSE pairs its cores with more local memory than any other chip, enabling flexible, fast computation at lower latency and with less energy. The WSE has 18 GB of on-chip memory, accessible by its cores in one clock cycle. This collection of core-local memory gives the WSE an aggregate memory bandwidth of 9 petabytes per second: 10,000 times more memory bandwidth and 3,000 times more on-chip memory than the leading GPU.

- Communication fabric: The Cerebras WSE uses Swarm™, an interprocessor communication fabric that achieves breakthrough bandwidth and low latency at a fraction of the power draw of traditional communication techniques. Swarm provides a low-latency, high-bandwidth 2D mesh that links all 400,000 cores on the WSE with an aggregate bandwidth of 100 petabits per second. Swarm also supports single-word active messages, which receiving cores can handle without any software overhead. The hardware handles routing, reliable message delivery and synchronization, while software configures the optimal communication path through the 400,000 cores, connecting processors according to the structure of the particular user-defined neural network being run.
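The article does not explain how sparsity harvesting works inside the SLAC hardware. As a purely software illustration of the underlying idea, the Python sketch below computes a matrix-vector product and skips every multiply-accumulate (MAC) whose input is zero, as happens often after ReLU activations in deep learning. The function names are hypothetical, and this is not Cerebras' actual mechanism; it only shows why zeros translate into saved work.

```python
from typing import List, Tuple

Matrix = List[List[float]]

def matvec_dense(w: Matrix, x: List[float]) -> Tuple[List[float], int]:
    """Compute y = W @ x, performing every multiply-accumulate (MAC)."""
    y = [0.0] * len(w)
    macs = 0
    for i, row in enumerate(w):
        for j, xj in enumerate(x):
            y[i] += row[j] * xj
            macs += 1
    return y, macs

def matvec_skip_zeros(w: Matrix, x: List[float]) -> Tuple[List[float], int]:
    """Compute y = W @ x, issuing MACs only for nonzero entries of x."""
    # Gather the nonzero inputs once, then reuse them for every output row.
    nonzero = [(j, xj) for j, xj in enumerate(x) if xj != 0.0]
    y = [0.0] * len(w)
    macs = 0
    for i, row in enumerate(w):
        for j, xj in nonzero:
            y[i] += row[j] * xj
            macs += 1
    return y, macs

# ReLU-style activations: half of the inputs are exactly zero.
w = [[1.0, 2.0, 3.0, 4.0],
     [5.0, 6.0, 7.0, 8.0]]
x = [0.0, 1.5, 0.0, 2.0]

y_dense, macs_dense = matvec_dense(w, x)
y_sparse, macs_sparse = matvec_skip_zeros(w, x)
print(y_dense, macs_dense)    # [11.0, 25.0] 8
print(y_sparse, macs_sparse)  # [11.0, 25.0] 4
```

Both versions produce the same result, but the zero-skipping version does half the MACs here because half the activations are zero.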
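The article says software configures communication paths through the 2D mesh according to the structure of the neural network, but it does not describe Swarm's routing algorithm. The Python sketch below uses dimension-ordered (XY) routing, a common scheme for 2D meshes, purely as a stand-in assumption, together with a hypothetical placement of a three-layer pipeline. It illustrates why placement matters: on a mesh, each message crosses one link per hop, so layers that talk to each other should sit close together.

```python
from typing import List, Tuple

Coord = Tuple[int, int]  # (x, y) position of a core in a 2D mesh

def xy_route(src: Coord, dst: Coord) -> List[Coord]:
    """Dimension-ordered (XY) route: move along x first, then along y.
    Returns every core visited, including src and dst."""
    x, y = src
    path = [(x, y)]
    step_x = 1 if dst[0] > x else -1
    while x != dst[0]:
        x += step_x
        path.append((x, y))
    step_y = 1 if dst[1] > y else -1
    while y != dst[1]:
        y += step_y
        path.append((x, y))
    return path

def hops(src: Coord, dst: Coord) -> int:
    """Links traversed; on a mesh this equals the Manhattan distance."""
    return len(xy_route(src, dst)) - 1

def pipeline_hops(placement: List[Coord]) -> int:
    """Total hops when each layer sends its output to the next layer."""
    return sum(hops(a, b) for a, b in zip(placement, placement[1:]))

# Hypothetical placements of a 3-layer pipeline on the mesh.
adjacent = [(0, 0), (1, 0), (2, 0)]       # layers placed side by side
scattered = [(0, 0), (50, 40), (3, 90)]   # layers placed far apart

print(xy_route((0, 0), (2, 1)))  # [(0, 0), (1, 0), (2, 0), (2, 1)]
print(pipeline_hops(adjacent))   # 2
print(pipeline_hops(scattered))  # 187 (90 + 97)
```

Fewer hops means lower latency and less energy per message, which is one reason a compiler would map adjacent network layers onto neighboring regions of cores.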