Although software is supposedly “eating the world,” it is heavily restricted in doing so by access to development talent and the increasing number of tasks required to build software. The number of jobs requiring software developers is increasing at a rate that vastly outpaces the number of skilled professionals entering the market to fill those roles. Even for those already in a programmer role, most of their time is not necessarily spent coding new features but rather writing tests, patching security issues, reviewing code, and fixing bugs. These two factors make it even more important to boost the productivity of those in the workforce, and the most recent improvements in AI-driven natural language processing (NLP) models are making that a reality. By virtue of their size, underlying architecture, and training data and regime, the newest generation of state-of-the-art NLP models—called generative pre-trained transformers (GPTs)—can translate between many languages, including from text to code. Embedding this powerful ability into tools that developers can use is already proving invaluable at making developers better at their jobs and unlocking software production for less technical folks.

Software Struggles to Eat the World

The explosion of software for all sorts of use cases and business applications over the past decade or more has been matched by an equivalent rise in the number of jobs that require coding experience to build that software. A 2017 survey by Code.org estimated 500,000 open programming roles in the United States alone. Unfortunately, many go unfilled, especially since only 43,000 computer science graduates enter the market every year, a figure that has decreased in recent years. On top of that, it usually takes 3 to 5 years to become skilled enough to take those jobs; by the time a programmer is ready to enter a more senior role, the number of available roles will have increased by 28% (U.S. Bureau of Labor Statistics). As such, the talent bottleneck stymies many companies’ attempts to build impactful software.

Once a team of developers is in place within a company, the challenges don’t stop. Requirements for building software, particularly in terms of quality, security, and speed of delivery, are increasingly complex. Surprisingly, developers spend only about 30%–40% of their time developing new features or improving existing code (Newstack). A large portion of their work goes instead to writing tests, making fixes, and solving security issues. Senior developers also spend part of their time mentoring the juniors on their team and performing code reviews. Combined, these demands can reduce the delivery speed and cost-effectiveness of a software project. They also provide the perfect opportunity to pair AI with human developers to help address many of these drawbacks.

AI + Developers = Pair Programming Dream Team

AI-driven Coder Tools

With the strides that deep learning–based NLP has made in the past couple of years, a number of tools have emerged with AI at their core, designed to improve the productivity and code quality of developers. In particular, the models that these tools use can parse code to identify bugs and flaws, effectively performing some of the more tedious parts of a code review. A few recently released tools of this kind, like CodeGuru and DeepCode, found vulnerabilities that were difficult for humans to identify and flagged issues in 50% of the pull requests studied (AI-News).

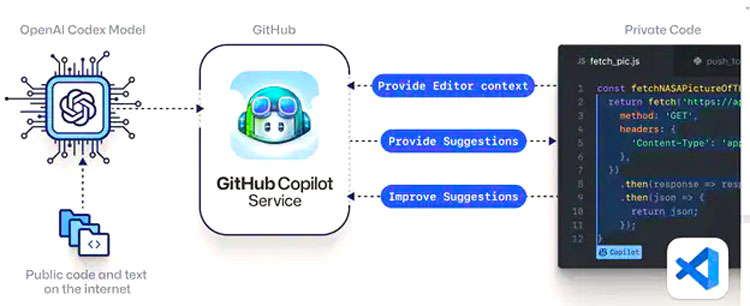

Additionally, modern NLP techniques improve developers' code quality and speed up development by auto-completing sections of code, monitoring code output for errors, and even auto-generating unit tests. OpenAI’s Codex model, which was incorporated into GitHub’s Copilot, can do this with astounding accuracy, even going so far as to generate code from human language input (Figure 1). This ability comes from its training data, which included both natural language fragments and a vast amount of code. A preliminary study from GitHub on its performance showed that for a mundane task like writing an HTTP server, pairing a developer with AI cut time to completion in half. The fact that the model underpinning this tool can auto-complete entire code sections from a single comment also makes coding vastly more accessible to beginners and less technical folks.

Coding Made More Accessible

The powerful AI translation capabilities of this new generation of NLP models mean that, within some limits, anyone can use human language to produce the snippets of code they need. These pieces of software can be in almost any programming language, from general-purpose languages like Python, JavaScript, and C++ to those used to access data in SQL and NoSQL databases. Provided that the program a person would like to write is not overly complicated, tools like Codex can likely help. Codex has proven useful for making small websites, deriving Excel functions, and converting a user's request from human language into the query language used to access the data. However, as the researchers behind these techniques note, the models are not correct all the time. Often, the code produced is mostly correct but requires some intervention from an experienced developer. In this sense, the models can boost the productivity of a human coding tutor, who only needs to step in where the AI runs into issues while a less experienced person is using it. This can also mean that junior developers’ productivity is greatly increased while their need for supervision and senior input is reduced.
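To make the text-to-query idea concrete, here is a minimal sketch of how a plain-language question might be packaged as a prompt for a code-generating model. The helper name `build_sql_prompt`, the comment-based prompt layout, and the example schema are all assumptions made for illustration; they are not taken from Codex's documentation, and no model is actually called here.

```python
def build_sql_prompt(schema, question):
    """Assemble a text prompt asking a code model to complete a SQL query.

    `schema` maps table names to lists of column names (hypothetical format).
    The prompt ends with "SELECT" so that a completion model would continue
    the query from there.
    """
    lines = ["-- Database schema:"]
    for table, columns in schema.items():
        # Describe each table as a SQL comment, e.g. "-- orders(id, total)"
        lines.append(f"-- {table}({', '.join(columns)})")
    lines.append(f"-- Question: {question}")
    lines.append("SELECT")
    return "\n".join(lines)


# Hypothetical schema and question, for illustration only.
example_schema = {"orders": ["id", "customer", "total"]}
prompt = build_sql_prompt(example_schema, "What is the total revenue?")
```

The resulting string would be sent to a text-to-code model, and whatever query comes back should still be reviewed by someone who knows the data, for the reasons given above.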

From Human Language to Computer Language

So how do most of these tools work? The main workhorse powering these innovative tools is the GPT—typically the third generation of such models, called GPT-3. This architecture was initially developed by OpenAI and trained on a massive amount of text from across the internet, including prose, digital books, tweets, open-source repositories, comments, online articles, and more. Although the goal was always more realistic language generation, the side effect of the model also being able to generate code led to the later development of Codex.

Several factors separate GPT generations from previous deep learning–based NLP models. These include the amount of data used for training and the way in which the models were trained as well as the number of parameters the models have and their state-of-the-art underlying architecture. Since these models and their predecessors are neural networks, the number of parameters influences the complexity of relationships in data that they can capture, meaning that bigger models can learn more nuanced patterns than smaller models. These models were also trained in a multitask setting and in a self-supervised fashion. Most neural networks are built to perform a single task and need data labeled specifically for that task; a good example of single-task specialization is AlphaGo, which is great at Go but cannot play chess. Learning from labeled data is called supervised learning. GPT-3, by contrast, was trained to predict the next word in a sequence, so the data don’t need labeling: the text itself supplies the answer. Next-word prediction is the backbone of many tasks like translation, text generation, and question answering.
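The reason next-word prediction needs no labels can be shown in a few lines: every position in a piece of raw text already provides an input (the words so far) and a target (the word that follows). The sketch below is a simplification under stated assumptions. It splits on whitespace, whereas real GPT models operate on subword tokens, and the function name `next_word_pairs` and the `context_size` parameter are illustrative choices, not part of any library.

```python
def next_word_pairs(text, context_size=3):
    """Turn raw text into (context, next_word) training pairs.

    No human labeling is involved: the target for each example is simply
    the word that follows the context in the original text.
    """
    words = text.split()  # simplification: real models use subword tokens
    pairs = []
    for i in range(1, len(words)):
        # Keep at most `context_size` preceding words as the model input.
        context = words[max(0, i - context_size):i]
        pairs.append((context, words[i]))
    return pairs


pairs = next_word_pairs("software is eating the world", context_size=2)
```

Here a five-word sentence yields four training examples, which hints at why internet-scale text produces such an enormous training set without any manual annotation.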

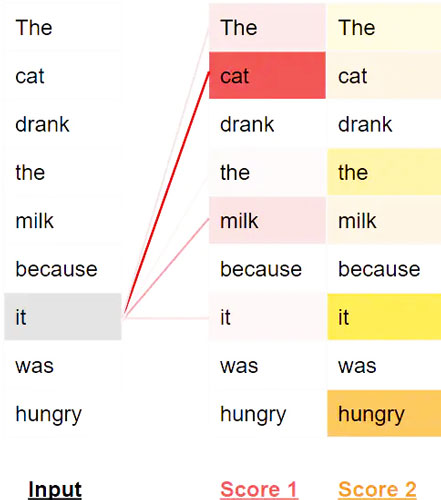

Additionally, these models are transformers, an architecture that outperforms previous NLP mainstays like recurrent neural networks and long short-term memory models. Transformers process entire sentences at once instead of word by word, store and use similarity scores between words in a mechanism called “attention,” and encode information about the position of each token in a sentence (Figure 2). All of this allows for a bigger model that can learn more, since dropping recurrence lets training be parallelized, which was a bottleneck in the past. It also removes the difficulties previous models had with forgetting relationships between far-apart words and sentences. Since GPT-3 is available through an application programming interface provided by OpenAI, it can be incorporated into other AI-for-coding products, which democratizes access to coding even further.
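As a rough illustration of the attention mechanism and position encoding described above, the following sketch implements scaled dot-product self-attention and a sinusoidal position encoding in plain Python. It is a teaching simplification, not GPT-3's implementation: it omits the learned query, key, and value projections, multiple attention heads, and the causal masking that GPT-style models use, and the sinusoidal scheme is the one from the original transformer design rather than a confirmed detail of GPT-3. All function names are illustrative.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors (the similarity score)."""
    return sum(x * y for x, y in zip(a, b))


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Each query is compared with every key at once; the resulting weights
    decide how much of each value vector flows into the output.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


def positional_encoding(position, dim):
    """Sinusoidal encoding of a token's position as a vector of size `dim`."""
    encoding = []
    for j in range(dim):
        angle = position / (10000 ** ((2 * (j // 2)) / dim))
        # Even indices use sine, odd indices use cosine.
        encoding.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
    return encoding


# Three toy "token" vectors attending to one another (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Because every query is scored against every key independently, none of these computations has to wait on the previous word, which is what makes the approach easy to parallelize and lets distant words influence each other directly.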

Conclusion

To keep building the vast amount of software needed to cover all the applications that require it, AI is stepping in to help boost the productivity of developers. Since the demand for programmers clearly outstrips the supply, augmenting the output and code quality of the coders that companies already have is proving more and more beneficial. With the recent dramatic improvements in AI-based NLP models, such as the particularly powerful GPT-3, the dream of an AI-powered pair programmer for human developers is becoming a reality. With such models embedded in their everyday tools, programmers stand to gain a lot, both in reduced time spent on repetitive tasks like writing tests and in improved code quality from automated reviews and auto-generated snippets. Even junior developers and less technical folks can benefit from the text-to-code capabilities now available. Software may not be able to eat the world alone, but AI can certainly help.

About the Author

Becks is a fullstack AI lead at Rogo, a New York-based startup building a platform to allow anyone to analyze and gain insights from their own data without a background in data science. In her spare time, she also works with Whale Seeker, another startup using AI to detect whales so that industry and these gentle giants can coexist profitably. She has worked across the spectrum in deep learning and machine learning, from investigating novel deep learning methods and applying research directly to real-world problems, to architecting pipelines and platforms that train and deploy AI models in the wild, to advising startups on their AI and data strategies.

Original Source: Mouser